Ultimately, we settled on the BlueRobotics BlueROV2, shown on the left, a nice compromise between a kit and a finished commercial product. Although the robot came unassembled, all of its individual components were already built and tested, so the user only had to put the pieces together. Assembly took only about seven hours, compared to the double-digit hour counts required for the aforementioned kits. Much of the hardware, electronics, and software was open source, and anything proprietary was thoroughly documented online. In addition, all of this was easily accessible and somewhat receptive to further modification: BlueRobotics sells additional sensors and accessories, naturally with instructions on how to integrate them, but anything custom is up to the user.

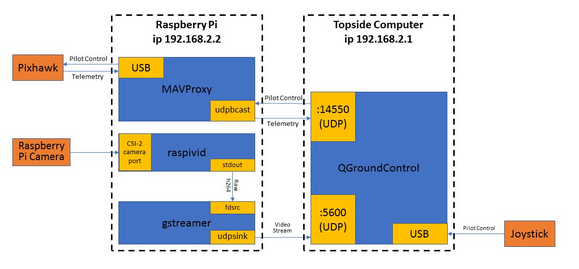

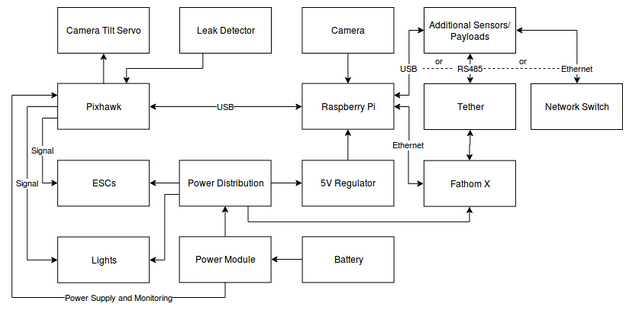

As previously mentioned, the software and electronics on the BlueROV2 are open source. Specifically, the autopilot software is ArduSub, a derivative of the ArduPilot software widely used on aerial drones, and it runs on a Pixhawk autopilot board. The Pixhawk is also connected to a Raspberry Pi 3 acting as a companion computer: a device that receives vehicle data from the autopilot and passes it on for further use, usually by some sort of ground control station (GCS) software. Here, the data is transmitted through the robot's tether to a topside computer, which displays it in QGroundControl, a GCS application. The software stack and a generalized hardware flowchart are shown below.
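To make the data path concrete, here is a minimal, purely conceptual sketch of the flow described above: the autopilot produces vehicle data, the companion computer pulls it and forwards it over the tether, and the topside GCS receives and displays it. All class names, the `Telemetry` fields, and the method names are hypothetical stand-ins chosen for illustration; they are not ArduSub, Raspberry Pi, or QGroundControl APIs, and the sketch does not model the actual message protocol the real components exchange.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Telemetry:
    """One vehicle-state sample (hypothetical fields, not ArduSub's real message set)."""
    depth_m: float
    heading_deg: float
    battery_v: float


class Autopilot:
    """Hypothetical stand-in for ArduSub on the Pixhawk: the source of vehicle data."""

    def __init__(self, samples: List[Telemetry]):
        self._samples = samples

    def read(self) -> List[Telemetry]:
        return list(self._samples)


class Tether:
    """Hypothetical stand-in for the tether: delivers each sample to every topside listener."""

    def __init__(self) -> None:
        self.subscribers: List[Callable[[Telemetry], None]] = []

    def send(self, sample: Telemetry) -> None:
        for deliver in self.subscribers:
            deliver(sample)


class CompanionComputer:
    """Hypothetical stand-in for the Raspberry Pi: pulls autopilot data and forwards it topside."""

    def __init__(self, autopilot: Autopilot, tether: Tether):
        self.autopilot = autopilot
        self.tether = tether

    def relay(self) -> int:
        """Forward every available sample; return how many were sent."""
        count = 0
        for sample in self.autopilot.read():
            self.tether.send(sample)
            count += 1
        return count


class TopsideGCS:
    """Hypothetical stand-in for QGroundControl on the topside computer."""

    def __init__(self, tether: Tether):
        self.received: List[Telemetry] = []
        tether.subscribers.append(self.on_telemetry)

    def on_telemetry(self, sample: Telemetry) -> None:
        self.received.append(sample)

    def display(self) -> str:
        """Summarize the most recent sample, as a GCS readout would."""
        latest = self.received[-1]
        return (f"depth {latest.depth_m:.1f} m, heading {latest.heading_deg:.0f} deg, "
                f"battery {latest.battery_v:.1f} V")


# Wire the pieces together in the same order as the hardware chain.
autopilot = Autopilot([Telemetry(1.2, 90.0, 15.8), Telemetry(2.5, 92.0, 15.7)])
tether = Tether()
gcs = TopsideGCS(tether)
pi = CompanionComputer(autopilot, tether)

forwarded = pi.relay()
print(forwarded)      # 2
print(gcs.display())  # depth 2.5 m, heading 92 deg, battery 15.7 V
```

The point of the structure is that the topside software never talks to the autopilot directly; everything it sees has passed through the companion computer and the tether, which is why the Raspberry Pi is the natural place to add custom processing later.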